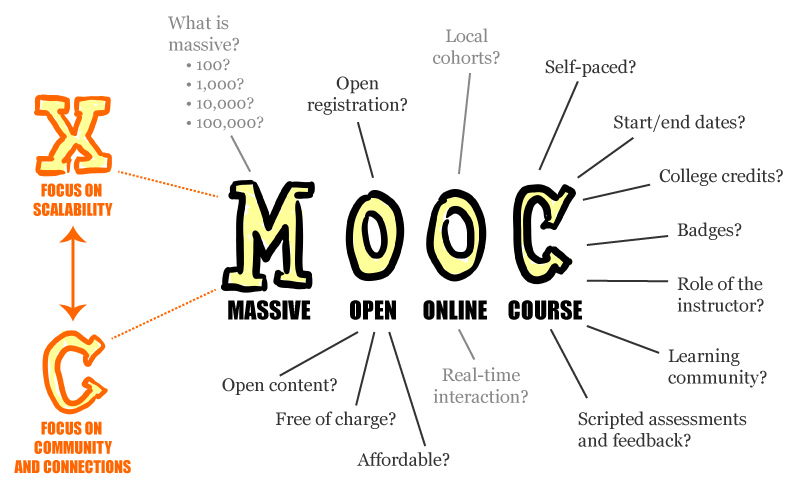

There are still people who talk about xMOOCs and cMOOCs. My response is that the MOOC world has evolved and that distinction is so 2012. In the European Journal of Open, Distance and E-Learning I read an interesting article that tries to define a MOOC taxonomy based on the literature.

The authors identified 13 different categories, which cover the information provided to learners before they enter a course. These categories are then compared and combined with classifications from the literature to create a taxonomy centred around eight terms: Massive (e.g. enrolments), Open (e.g. pre-requisites), Online (e.g. timings), Assessment, Pedagogy (e.g. instructor-led), Quality (e.g. reviews), Delivery (e.g. educators), Subject (e.g. syllabus).

They looked not only at how the different MOOC providers categorize their MOOCs, but also at how MOOC aggregators, such as Class Central, do so. The aggregators are even more focused on the learners.

The taxonomy they proposed in the article:

| Term | Description | Example Fields |
| --- | --- | --- |
| Massive | A set of numeric data related to the MOOC | Enrolments; Retention; Engagement |
| Open | Aspects that contribute to the openness of the course | Pre-requisites; Costs; Course Language; Aspects of diversity |
| Online | Aspects relating to delivery via the internet | Platform used to provide the course; Timing aspects; Use of multimedia; Accessibility (diversity) |
| *Assessment* | Aspects of course, including areas listed to the left in italics | Certification; Mode of assessment |
| *Pedagogy* | Aspects of course, including areas listed to the left in italics | Connectionist / cMOOC; Instructor led / xMOOC |
| *Quality* | Aspects of course, including areas listed to the left in italics | Quality Assurance; Reviews; Ratings |
| *Delivery* | Aspects of course, including areas listed to the left in italics | Educators; Institution; Supporters |
| *Subject* | Aspects of course, including areas listed to the left in italics | Brief description; Syllabus; Subject area |

Conclusion

I do agree that we need a more extensive taxonomy for MOOCs, but I still see characteristics missing from this taxonomy. A couple of comments:

- Openness is more than the listed example fields. For me, the open license in particular is missing.

- For pedagogy they fall back on the c and x distinction, while nowadays the pedagogy of most MOOCs is much more complex. Often learners can choose how actively they engage in the course.

- I am missing the aspect of teacher presence in the course. Is it a self-paced course with no involvement of the educators, or are they actively involved in the discussions and also giving feedback on assignments?

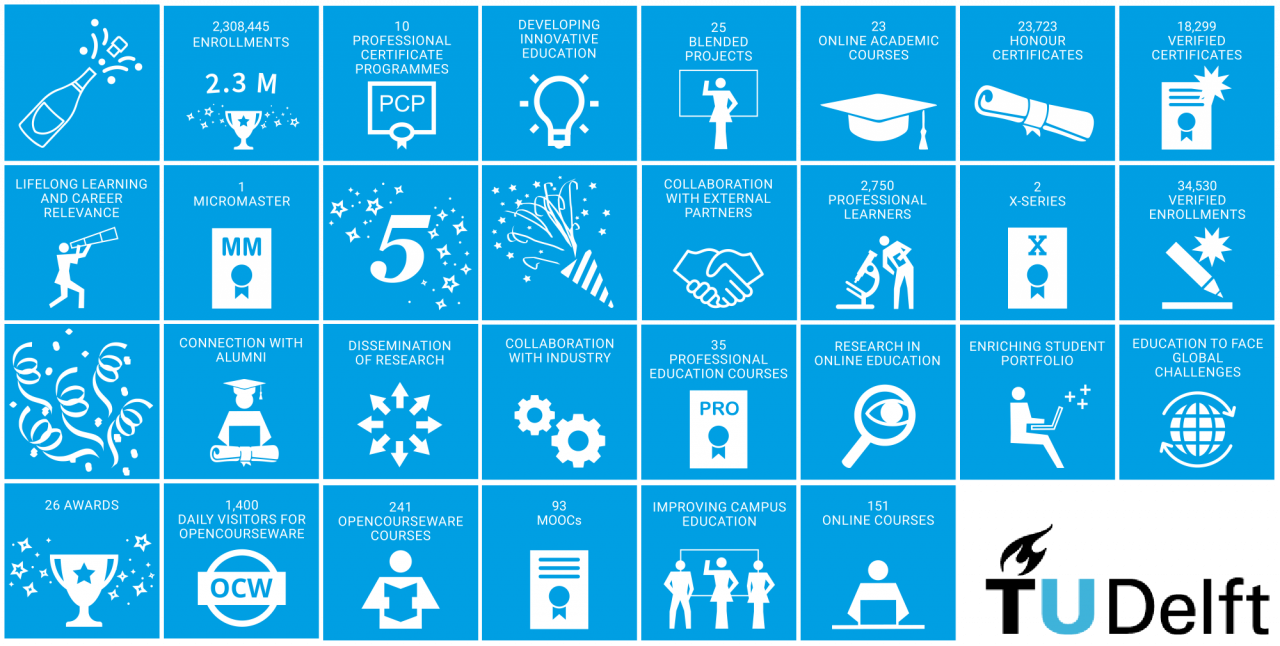

- Many MOOCs are now part of short learning programmes, such as the MicroMasters and Professional Certificate programmes of edX and the Specializations of Coursera.

Although I think it is excellent that the field is working on a MOOC taxonomy, the field itself is still in development. This means that any taxonomy will always lag behind the current state. The big question is: is a MOOC taxonomy still relevant, now that we see MOOCs becoming more and more like regular online courses? Many MOOCs are no longer massive or open.

Reference

Liyanagunawardena, T. R., Lundqvist, K., Mitchell, R., Warburton, S. and Williams, S. A. (2019) A MOOC taxonomy based on classification schemes of MOOCs. European Journal of Open, Distance and E-Learning, 22 (1). pp. 85-103. ISSN 1027-5207

Image CC-BY Mathieu Plourde